How Leading Brands Are Increasing Revenue With Marqo

Agentic product discovery that drives sales

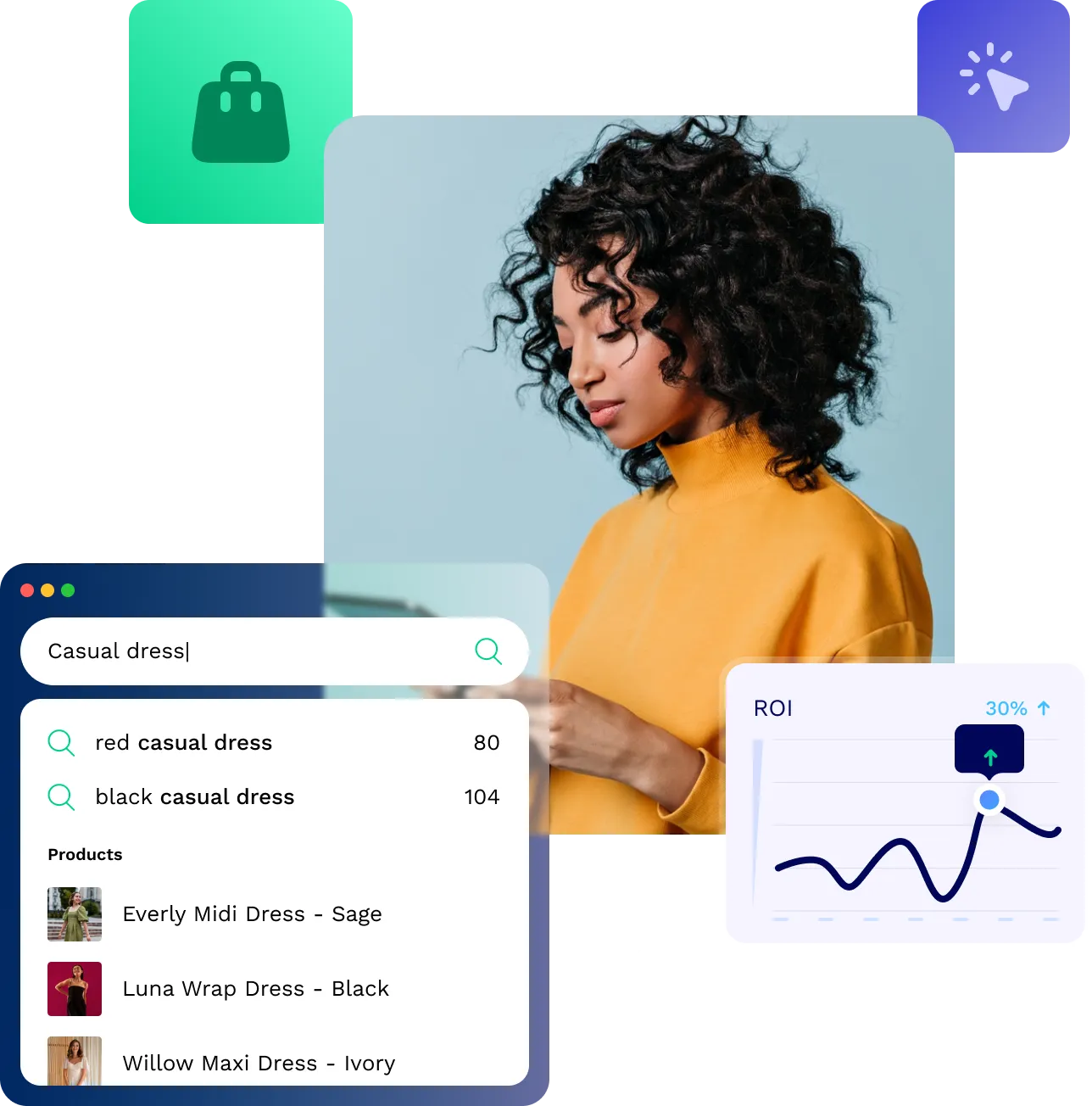

Intelligent Understanding

Marqo goes beyond keyword search with instant indexing, semantic relevance, typo tolerance, and multilingual comprehension, delivering accurate and meaningful results for every query.

Adaptive Journeys

Marqo adapts in real time to shopper intent and behavior. It seamlessly shifts between results, carousels, guided discovery, and conversational search to deliver a more refined, personalized shopping experience.

Strategic Automation

Marqo automates ranking, boosts, filters, and collections through AI, reducing manual merchandising work and enabling your team to focus on strategy while improving relevance, conversion, and revenue.

High-Conversion Search in 3 Steps

Install the pixel

Marqo's pixel installs with a single line of code and starts capturing shopper interaction data (clicks, add-to-cart events, and purchases) to learn what your customers want and how they shop.

Train a search engine tailored to your brand

Marqo uses your product catalog, shopper behavior, and our proprietary LLM training framework to build a personalized AI search engine, tailored to your ecommerce brand and customers.

Plug in and go live

Deploy Marqo via API or with one-click integrations for Shopify, Adobe Commerce, and Salesforce Commerce Cloud.

KICKS CREW is redefining how the world discovers sneakers with Marqo

Sneaker fans find exactly what they're looking for, whether by model code, drop, or style, and the outcomes speak for themselves.

+16.0%

Increase in revenue added to cart from search

+17.7%

Uplift in sitewide conversion rate

Marqo covers every angle where other solutions fall short

Ready to transform your product discovery?

Experience how Marqo’s AI-native search drives more revenue, better conversion, and happier customers
